Yufeng XU, Yuanzhi LIU, Minghui QIN, Hui ZHAO, Wei TAO. Global Low Bias Visual/inertial/weak-positional-aided Fusion Navigation System[J]. Acta Photonica Sinica, 2024, 53(4): 0415001

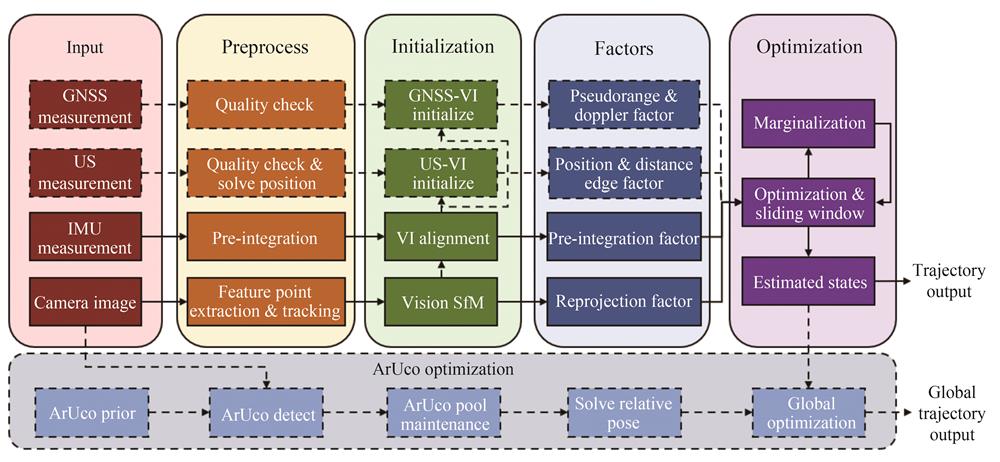

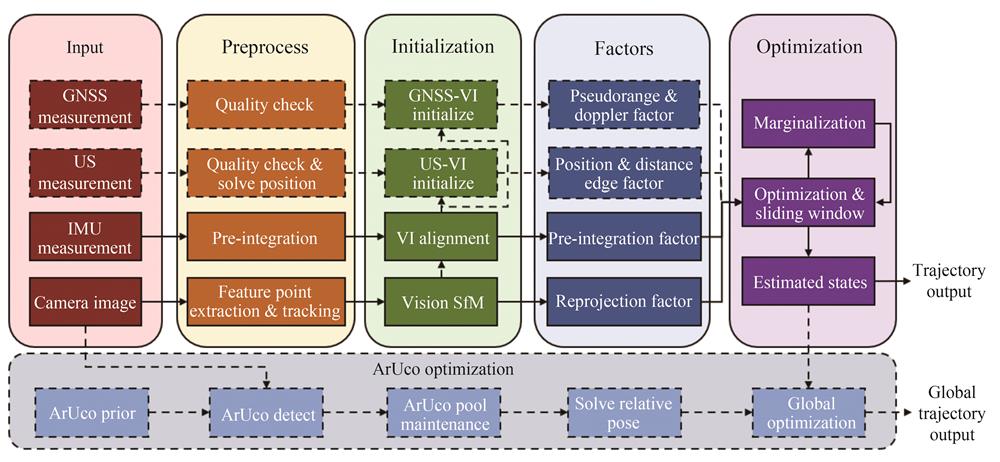

Fig. 1. Overall framework of our method

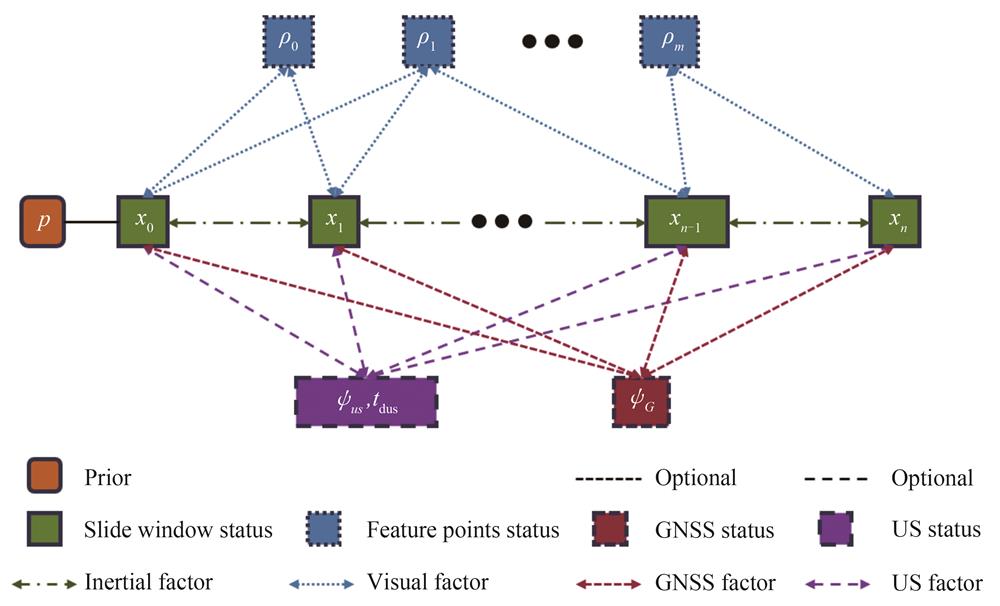

Fig. 2. Factor graph model

Fig. 3. ArUco target coordinate system definition and coordinate transformation between camera and ArUco target

Fig. 4. Diagram of the experimental site

Fig. 5. Wheeled robot platform and its sensor distribution

Fig. 6. Laser point cloud map generation and ground-truth trajectory generation

Fig. 7. Navigation result trajectories of each method under three scenarios

Fig. 8. Outdoor scene feature point tracking at night

Fig. 9. Examples of ArUco target distribution along the path and of ArUco target detection

Fig. 10. Navigation trajectory comparison with and without ArUco assistance

Table 1. System hardware parameters

Table 2. Error test results under different experimental conditions

Table 3. Error test results with and without ArUco assistance

Table 4. Single-run processing time of our method in different scenarios
