Jinmiao Yu, Jingjing Wu. Betel Nut Pose Recognition and Localization System Based on Structured Light 3D Vision[J]. Laser & Optoelectronics Progress, 2023, 60(16): 1615010


- Laser & Optoelectronics Progress

- Vol. 60, Issue 16, 1615010 (2023)

Fig. 1. Structured light 3D vision sensing system and the relationships among its coordinate systems

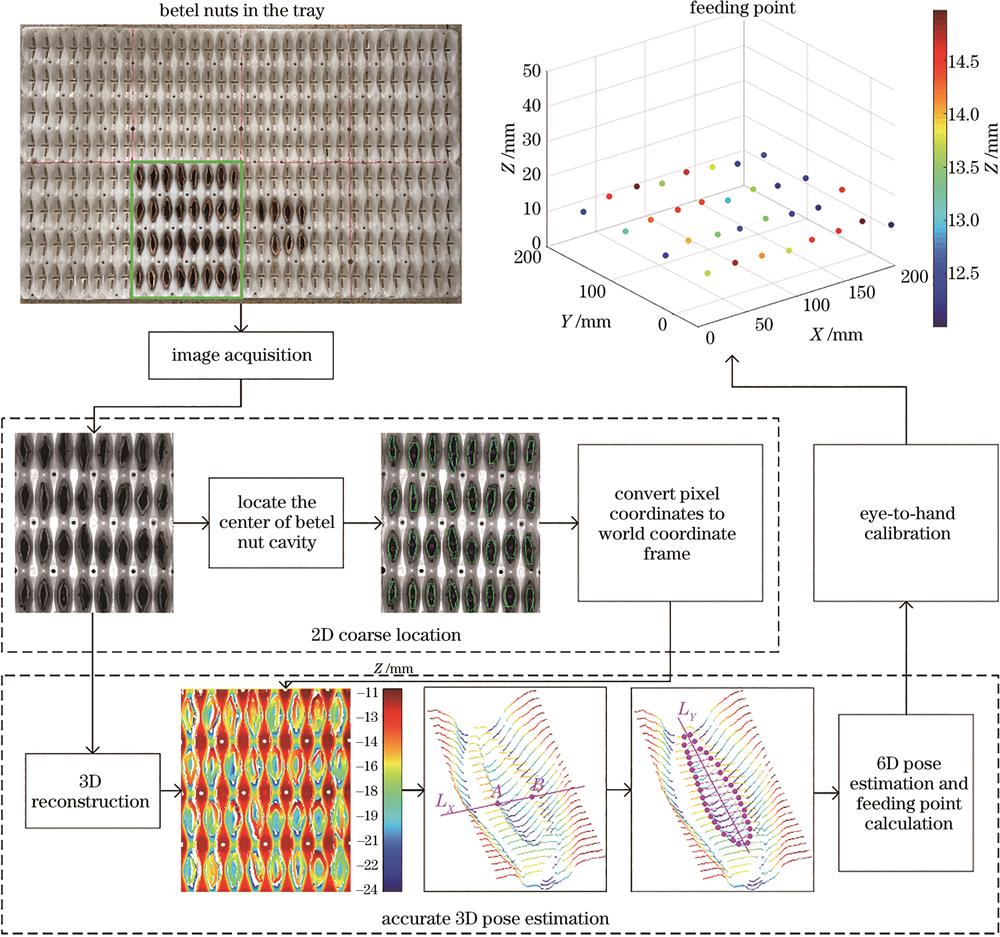

Fig. 2. Flow chart of betel nut pose recognition and localization

Fig. 3. Process of phase-shifting profilometry (PSP) 3D reconstruction
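In phase-shifting profilometry, each camera pixel observes N sinusoidal fringe images shifted by 2πn/N, and the wrapped phase is recovered in closed form from those intensities. The sketch below shows the standard N-step formula at a single pixel, assuming an ideal model I_n = A + B·cos(φ + 2πn/N); the number of phase steps used in the paper is not stated in these captions, and the function names `wrapped_phase` and `synthesize` are hypothetical.

```python
import math


def wrapped_phase(intensities):
    """Recover the wrapped phase from N equally shifted fringe samples at one pixel.

    Assumed model (hypothetical, ideal): I_n = A + B*cos(phi + 2*pi*n/N), n = 0..N-1, N >= 3.
    Returns phi wrapped to (-pi, pi].
    """
    n_steps = len(intensities)
    if n_steps < 3:
        raise ValueError("phase shifting needs at least 3 images")
    num = 0.0  # accumulates sum of I_n * sin(delta_n)
    den = 0.0  # accumulates sum of I_n * cos(delta_n)
    for n, value in enumerate(intensities):
        delta = 2.0 * math.pi * n / n_steps
        num += value * math.sin(delta)
        den += value * math.cos(delta)
    # For the ideal model: sum I*sin = -(N*B/2)*sin(phi), sum I*cos = (N*B/2)*cos(phi).
    return math.atan2(-num, den)


def synthesize(phi, n_steps, a=0.5, b=0.4):
    """Generate ideal fringe intensities for one pixel (for testing only)."""
    return [a + b * math.cos(phi + 2.0 * math.pi * n / n_steps) for n in range(n_steps)]


if __name__ == "__main__":
    samples = synthesize(1.0, 4)
    print(round(wrapped_phase(samples), 6))
```

The result is only the wrapped phase. A phase-unwrapping step (for example a multi-frequency or Gray-code scheme) and the system calibration are still needed before depth can be computed; which unwrapping method the paper uses is not stated here.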

Fig. 4. Definition of the betel nut attitude angles and the four betel nut categories. (a) Definition of attitude angles; (b) normal; (c) over-rolling; (d) upturned; (e) brine-stained

Fig. 5. Flow chart of attitude recognition and positioning algorithm

Fig. 6. Process of pose estimation
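One common way to estimate an in-plane (yaw) orientation for an elongated object such as a betel nut is to project its points onto a plane and take the direction of the major axis of their covariance ellipse. The sketch below does this from second central moments; it is an assumption for illustration, not necessarily the attitude-angle definition of Fig. 4(a) or the estimation procedure of Fig. 6, and `principal_axis_angle` is a hypothetical name.

```python
import math


def principal_axis_angle(points):
    """Return the orientation (radians, in (-pi/2, pi/2]) of the major principal axis
    of a set of 2D points, computed from their second central moments.

    Hypothetical helper: e.g. apply it to a point cloud projected onto the XY plane.
    """
    if len(points) < 2:
        raise ValueError("need at least two points")
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points) / n
    syy = sum((p[1] - my) ** 2 for p in points) / n
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / n
    # Angle of the major axis of the covariance ellipse; 0.0 if the points coincide.
    return 0.5 * math.atan2(2.0 * sxy, sxx - syy)


if __name__ == "__main__":
    diagonal = [(t, t) for t in range(5)]
    print(math.degrees(principal_axis_angle(diagonal)))
```

The closed form avoids an explicit eigen-decomposition, but it only yields one angle; a full 3D attitude would need the principal axes of the 3D covariance instead.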

Fig. 7. Demonstration of extracting the brine zone of a betel nut

Fig. 8. Cross section of a betel nut (areca nut) point cloud
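A cross section of a point cloud is typically obtained by keeping only the points inside a thin slab around a cutting plane and projecting them onto that plane. The sketch below assumes an axis-aligned cutting plane; the slicing direction, position, and slab thickness used for Fig. 8 are not given in these captions, and `cross_section` is a hypothetical name.

```python
def cross_section(points, axis=1, position=0.0, half_thickness=0.5):
    """Return the points whose coordinate along `axis` (0=X, 1=Y, 2=Z) lies within
    `half_thickness` of `position`, projected onto the two remaining axes.

    Hypothetical helper; an axis-aligned cutting plane is an assumption.
    """
    if axis not in (0, 1, 2):
        raise ValueError("axis must be 0 (X), 1 (Y) or 2 (Z)")
    keep = [i for i in range(3) if i != axis]  # the two in-plane axes, in order
    section = []
    for p in points:
        if abs(p[axis] - position) <= half_thickness:
            section.append((p[keep[0]], p[keep[1]]))
    return section


if __name__ == "__main__":
    cloud = [(0.0, 0.0, 1.0), (1.0, 0.2, 2.0), (2.0, 0.6, 3.0), (3.0, -0.4, 4.0)]
    print(cross_section(cloud, axis=1, position=0.0, half_thickness=0.5))
```

A thinner slab gives a sharper profile but fewer points, so the thickness is usually chosen relative to the point spacing of the reconstruction.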

Fig. 9. Examples of 3D reconstruction systems and robot calibration
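Once the camera and robot are calibrated against each other, a point measured in the camera frame is mapped into the robot base frame by a rigid transform, p_robot = R·p_cam + t. The sketch below assumes the calibration has already produced a rotation matrix R and translation t; how the paper obtains them (Fig. 9) is not detailed in these captions, and `camera_to_robot` and `rot_z` are hypothetical names.

```python
import math

Vector = tuple[float, float, float]
Matrix = tuple[Vector, Vector, Vector]


def camera_to_robot(point: Vector, rotation: Matrix, translation: Vector) -> Vector:
    """Map a point from the camera frame to the robot base frame: p_r = R @ p_c + t.

    `rotation` and `translation` are assumed to come from a prior calibration step.
    """
    return tuple(
        sum(rotation[row][col] * point[col] for col in range(3)) + translation[row]
        for row in range(3)
    )


def rot_z(angle: float) -> Matrix:
    """Rotation matrix about the Z axis (used here only to build an example)."""
    c, s = math.cos(angle), math.sin(angle)
    return ((c, -s, 0.0), (s, c, 0.0), (0.0, 0.0, 1.0))


if __name__ == "__main__":
    feeding_point_cam = (1.0, 0.0, 0.0)
    print(camera_to_robot(feeding_point_cam, rot_z(math.pi / 2), (1.0, 2.0, 3.0)))
```

The same mapping is often written as a single 4×4 homogeneous matrix; the explicit R and t form is used here to keep the example dependency-free.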

Fig. 10. Confusion matrices

Fig. 11. Experimental platform

Fig. 12. Three groups of pose estimation experiments

Fig. 13. Three typical postures during betel nut handling. (a) Over-rolling; (b) upturned; (c) brine-stained

Fig. 14. Confusion matrix obtained by the proposed pose estimation algorithm
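Overall accuracy and per-class precision and recall follow directly from a confusion matrix. The sketch below assumes rows are true classes and columns are predicted classes (the orientation used in Figs. 10 and 14 is not stated in these captions); `confusion_metrics` is a hypothetical name, and the counts in the usage block are made up for illustration, not the paper's results.

```python
def confusion_metrics(matrix):
    """Compute overall accuracy and per-class precision and recall.

    Assumed convention: matrix[i][j] = number of samples of true class i predicted as class j.
    Classes with no predictions (or no samples) get precision (or recall) 0.0.
    """
    k = len(matrix)
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(k))
    accuracy = correct / total if total else 0.0
    precision, recall = [], []
    for i in range(k):
        predicted_i = sum(matrix[r][i] for r in range(k))  # column sum
        actual_i = sum(matrix[i])  # row sum
        precision.append(matrix[i][i] / predicted_i if predicted_i else 0.0)
        recall.append(matrix[i][i] / actual_i if actual_i else 0.0)
    return accuracy, precision, recall


if __name__ == "__main__":
    classes = ["normal", "over-rolling", "upturned", "brine-stained"]
    # Made-up counts for illustration only; these are NOT the paper's results.
    toy = [
        [18, 1, 1, 0],
        [2, 17, 0, 1],
        [0, 1, 19, 0],
        [1, 0, 0, 19],
    ]
    acc, prec, rec = confusion_metrics(toy)
    print(f"accuracy = {acc:.3f}")
    for name, p, r in zip(classes, prec, rec):
        print(f"{name:>14}: precision {p:.3f}, recall {r:.3f}")
```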

Fig. 15. Experimental process. (a) 3D reconstruction process; (b) acquisition of the actual feeding-point coordinates; (c) betel nuts to be fed

Fig. 16. Analysis of location experimental results. (a) Location of feeding point; (b) errors of X, Y, and Z coordinates
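Location accuracy of the kind plotted in Fig. 16(b) is usually summarized by the signed X, Y, and Z errors between measured and actual feeding points, plus a per-axis RMSE and a Euclidean error. The sketch below computes those quantities; RMSE is a common choice assumed here, not necessarily the metric reported in Table 3, and `location_errors` is a hypothetical name (units follow whatever the inputs use, e.g. mm).

```python
import math


def location_errors(measured, actual):
    """Return per-point signed (dx, dy, dz) errors, per-point Euclidean errors,
    and the per-axis RMSE between measured and actual 3D points."""
    if len(measured) != len(actual) or not measured:
        raise ValueError("need two non-empty lists of equal length")
    deltas = [tuple(m[i] - a[i] for i in range(3)) for m, a in zip(measured, actual)]
    euclidean = [math.sqrt(dx * dx + dy * dy + dz * dz) for dx, dy, dz in deltas]
    n = len(deltas)
    rmse = tuple(math.sqrt(sum(d[i] ** 2 for d in deltas) / n) for i in range(3))
    return deltas, euclidean, rmse


if __name__ == "__main__":
    measured = [(1.0, 2.0, 3.0), (0.0, 0.0, 0.0)]
    actual = [(0.0, 0.0, 1.0), (0.0, 0.0, 0.0)]
    deltas, euclidean, rmse = location_errors(measured, actual)
    print(deltas, euclidean, rmse)
```

Keeping the signed per-axis errors, rather than only their magnitudes, makes a systematic offset (for example a calibration bias along Z) visible.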


Table 1. Detection results obtained by the proposed 3D pose estimation algorithm


Table 2. Pose estimation accuracy and work efficiency across 10 groups


Table 3. Experimental results of the location accuracy evaluation
