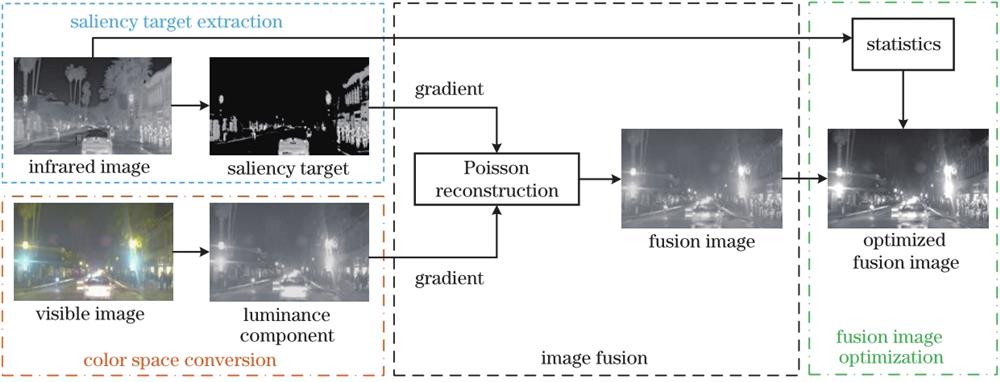

Wenqing Liu, Renhua Wang, Xiaowen Liu, Xin Yang. Infrared and Visible Image Fusion Method Based on Saliency Target Extraction and Poisson Reconstruction[J]. Laser & Optoelectronics Progress, 2023, 60(16): 1610012


- Laser & Optoelectronics Progress

- Vol. 60, Issue 16, 1610012 (2023)

