Anhu Li, Zhaojun Deng, Xingsheng Liu, Hao Chen. Research Progresses of Pose Estimation Based on Virtual Cameras[J]. Laser & Optoelectronics Progress, 2022, 59(14): 1415002


- Laser & Optoelectronics Progress

- Vol. 59, Issue 14, 1415002 (2022)

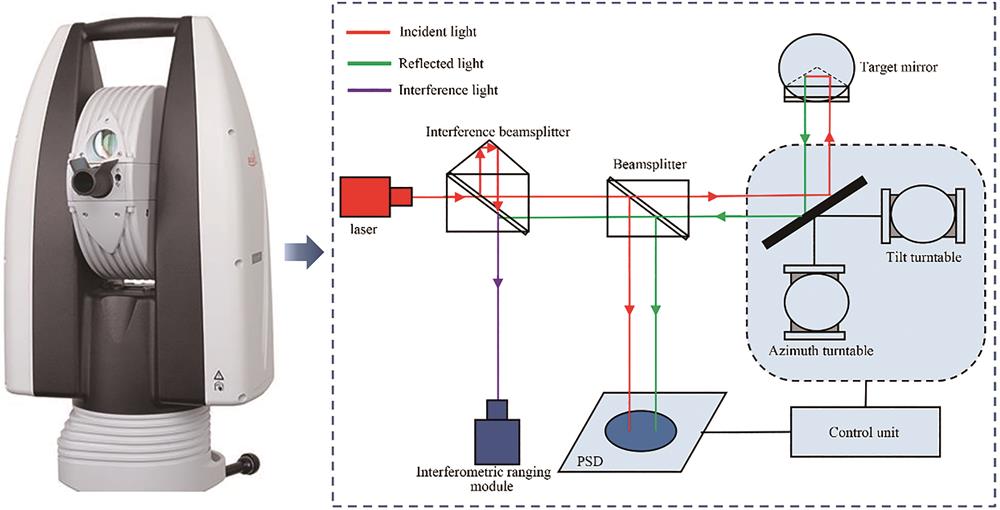

![Schematic of a laser tracker[20]](/richHtml/lop/2022/59/14/1415002/img_01.jpg)

Fig. 1. Schematic of a laser tracker[20]

![Basic schematic diagram of mainstream inertial-unit-based pose estimation systems[32-33]. (a) Pose estimation system based on laser inertial units; (b) pose estimation system based on fiber optic inertial units; (c) pose estimation system based on MEMS inertial units](/richHtml/lop/2022/59/14/1415002/img_02.jpg)

Fig. 2. Basic schematic diagram of mainstream inertial-unit-based pose estimation systems[32-33]. (a) Pose estimation system based on laser inertial units; (b) pose estimation system based on fiber optic inertial units; (c) pose estimation system based on MEMS inertial units

Fig. 3. Virtual camera imaging system based on a biprism. (a) Virtual viewpoint imaging system[55]; (b) virtual camera imaging system[56]

Fig. 4. Virtual camera imaging system based on a micro-prism array[64-65]. (a) System schematic; (b) reconstruction principle; (c) beam propagation principle; (d) system composition; (e) traditional endoscopic imaging; (f) virtual camera imaging

Fig. 5. Virtual camera imaging system based on grating diffraction[68]

Fig. 6. Mirror-based adjustable virtual camera imaging system[70-72]. (a) Single mirror; (b) double mirrors; (c) triple mirrors; (d) triple mirrors with a beam splitter; (e) four mirrors; (f) dual mirrors with a prismatic mirror

Fig. 7. Schematic illustration of 3D imaging using FMCW LiDAR[81]. (a) System layout; (b) multi-beam scanning mechanism using Risley prisms

Fig. 8. Pose estimation system based on dynamic virtual cameras[86]

Fig. 9. Basic principle of target pose estimation based on point features[90]

Fig. 10. Pose estimation based on CAD template matching[104]

Fig. 11. Basic principle of the pose estimation method based on a graph neural network[133]


Table 1. Performance comparison of mainstream pose estimation systems


Table 2. Comparison of mainstream pose estimation methods
