[1] Bai Y B, Pan K L, Geng L. Signal processing of spatial convolutional neural network for laser ranging[J]. Chinese Journal of Lasers, 48, 2304001(2021).

[2] Wu T F, Zhou Q, Lin J R et al. Frequency scanning interferometry absolute distance measurement[J]. Chinese Journal of Lasers, 48, 1918002(2021).

[3] Xu G Q, Zhang Y F, Wan J W et al. Application of high-resolution three-dimensional imaging lidar[J]. Acta Optica Sinica, 41, 1628002(2021).

[4] Gao S, Bai L Z. Monocular camera-based three-point laser pointer ranging and pose estimation method[J]. Acta Optica Sinica, 41, 0915001(2021).

[5] Kang Y Q, Liu J, Wang Y et al. Low-dose CT 3D reconstruction using convolutional sparse coding and gradient L0-norm[J]. Acta Optica Sinica, 41, 0911005(2021).

[6] Chen J, Zhang Y Q, Song P et al. Application of deep learning to 3D object reconstruction from a single image[J]. Acta Automatica Sinica, 45, 657-668(2019).

[7] Eigen D, Puhrsch C, Fergus R. Depth map prediction from a single image using a multi-scale deep network[C], 2366-2374(2014).

[8] Eigen D, Fergus R. Predicting depth, surface normals and semantic labels with a common multi-scale convolutional architecture[C], 2650-2658(2015).

[9] Zoran D, Isola P, Krishnan D et al. Learning ordinal relationships for mid-level vision[C], 388-396(2015).

[10] Cao Y, Wu Z F, Shen C H. Estimating depth from monocular images as classification using deep fully convolutional residual networks[J]. IEEE Transactions on Circuits and Systems for Video Technology, 28, 3174-3182(2018).

[11] Fu H A, Gong M M, Wang C H et al. Deep ordinal regression network for monocular depth estimation[C], 2002-2011(2018).

[12] Garg R, Kumar B G V, Carneiro G et al. Unsupervised CNN for single view depth estimation: geometry to the rescue[M]. Leibe B, Matas J, Sebe N, et al. Computer vision-ECCV 2016, 9912, 740-756(2016).

[13] Xian K, Zhang J M, Wang O et al. Structure-guided ranking loss for single image depth prediction[C], 608-617(2020).

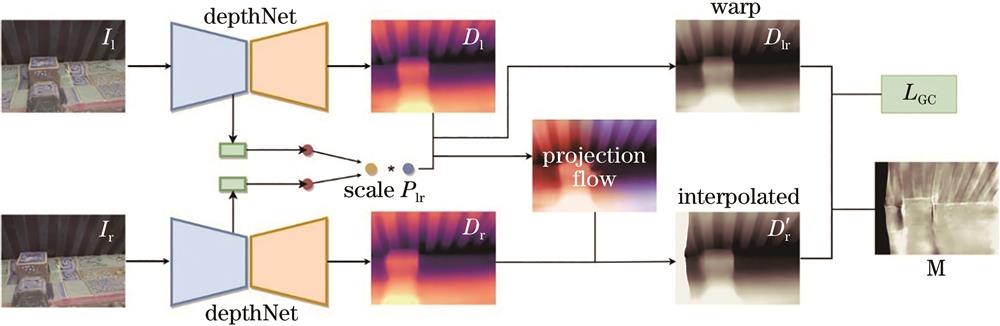

[14] Godard C, Mac Aodha O, Brostow G J. Unsupervised monocular depth estimation with left-right consistency[C], 6602-6611(2017).

[15] Zhou J S, Wang Y W, Qin K H et al. Moving indoor: unsupervised video depth learning in challenging environments[C], 8617-8626(2019).

[16] Bian J W, Li Z C, Wang N Y et al. Unsupervised scale-consistent depth and ego-motion learning from monocular video[EB/OL]. https://arxiv.org/abs/1908.10553v1

[17] Zhou T H, Brown M, Snavely N et al. Unsupervised learning of depth and ego-motion from video[C], 6612-6619(2017).

[18] Wang Z, Bovik A C, Sheikh H R et al. Image quality assessment: from error visibility to structural similarity[J]. IEEE Transactions on Image Processing, 13, 600-612(2004).

[19] Godard C, Mac Aodha O, Firman M et al. Digging into self-supervised monocular depth estimation[C], 3827-3837(2019).

[20] Lowe D G. Distinctive image features from scale-invariant keypoints[J]. International Journal of Computer Vision, 60, 91-110(2004).

[21] Fischler M A, Bolles R C. Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography[J]. Communications of the ACM, 24, 381-395(1981).

[22] Umeyama S. Least-squares estimation of transformation parameters between two point patterns[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 13, 376-380(1991).

[23] Paszke A, Gross S, Chintala S et al. Automatic differentiation in PyTorch[C](2017).

[24] Kingma D, Ba J. Adam: a method for stochastic optimization[EB/OL]. https://arxiv.org/abs/1412.6980

[25] Ranjan A, Jampani V, Balles L et al. Competitive collaboration: joint unsupervised learning of depth, camera motion, optical flow and motion segmentation[C], 12232-12241(2019).