[1] Yang B Y, Du X P, Fang Y Q et al. Review of rigid object pose estimation from a single image[J]. Journal of Image and Graphics, 26, 334-354(2021).

[2] Sahin C, Garcia-Hernando G, Sock J et al. A review on object pose recovery: from 3D bounding box detectors to full 6D pose estimators[J]. Image and Vision Computing, 96, 103898(2020).

[3] Du G G, Wang K, Lian S G et al. Vision-based robotic grasping from object localization, object pose estimation to grasp estimation for parallel grippers: a review[J]. Artificial Intelligence Review, 54, 1677-1734(2021).

[5] Chen W, Jia X, Chang H J et al. G2L-Net: global to local network for real-time 6D pose estimation with embedding vector features[C], 4232-4241(2020).

[6] Wang G, Manhardt F, Tombari F et al. GDR-Net: geometry-guided direct regression network for monocular 6D object pose estimation[C], 16606-16616(2021).

[7] Rad M, Lepetit V. BB8: a scalable, accurate, robust to partial occlusion method for predicting the 3D poses of challenging objects without using depth[C], 3848-3856(2017).

[9] Peng S D, Zhou X W, Liu Y et al. PVNet: pixel-wise voting network for 6DoF object pose estimation[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 44, 3212-3223(2022).

[10] Qi C R, Liu W, Wu C X et al. Frustum PointNets for 3D object detection from RGB-D data[C], 918-927(2018).

[11] Chen H Y, Li L T, Chen P et al. 6D pose estimation network in complex point cloud scenes[J]. Journal of Electronics & Information Technology, 44, 1591-1601(2022).

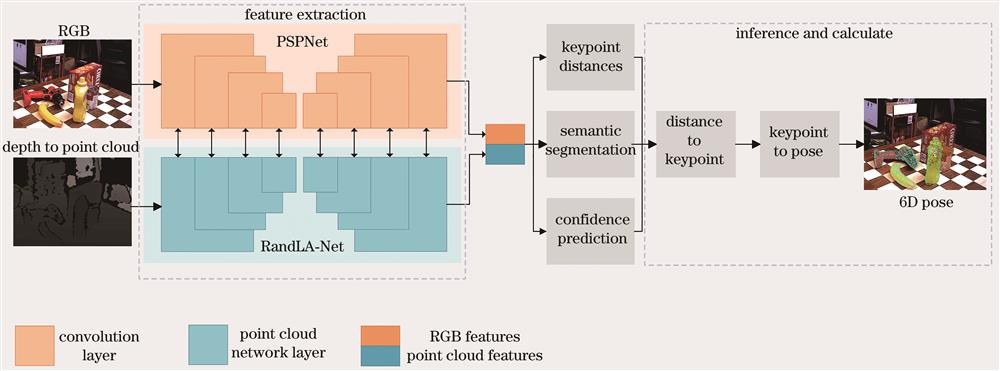

[12] Wang C, Xu D F, Zhu Y K et al. DenseFusion: 6D object pose estimation by iterative dense fusion[C], 3338-3347(2019).

[13] Zhao H S, Shi J P, Qi X J et al. Pyramid scene parsing network[C], 6230-6239(2017).

[14] Hu Q Y, Yang B, Xie L H et al. RandLA-Net: efficient semantic segmentation of large-scale point clouds[C], 11105-11114(2020).

[15] He K M, Zhang X Y, Ren S Q et al. Deep residual learning for image recognition[C], 770-778(2016).

[16] Huang G, Liu Z, van der Maaten L et al. Densely connected convolutional networks[C], 2261-2269(2017).