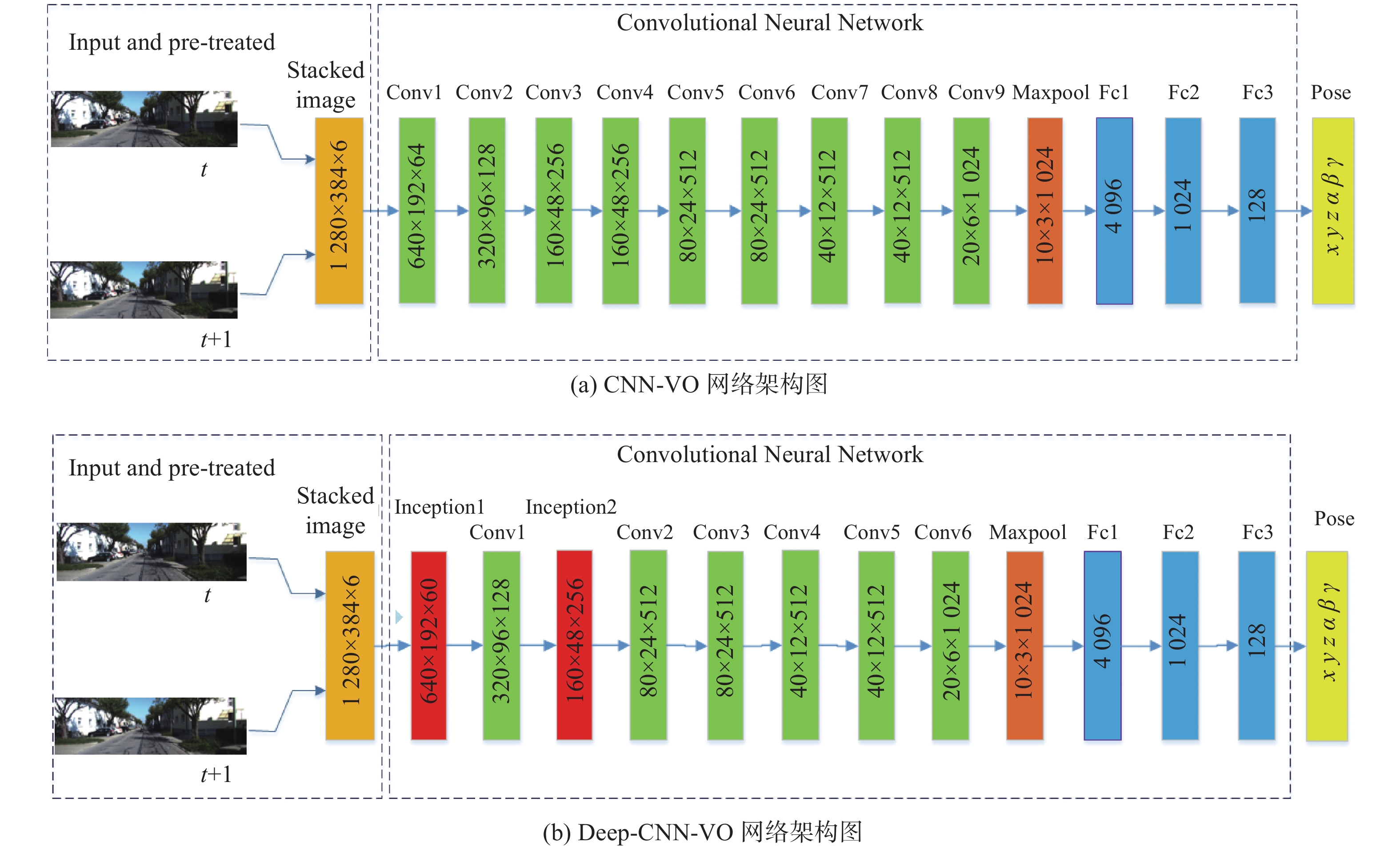

Jianpeng SU, Yingping HUANG, Bogan ZHAO, Xing HU. Research on visual odometry using deep convolution neural network[J]. Optical Instruments, 2020, 42(4): 33

- Optical Instruments

- Vol. 42, Issue 4, 33 (2020)

Abstract