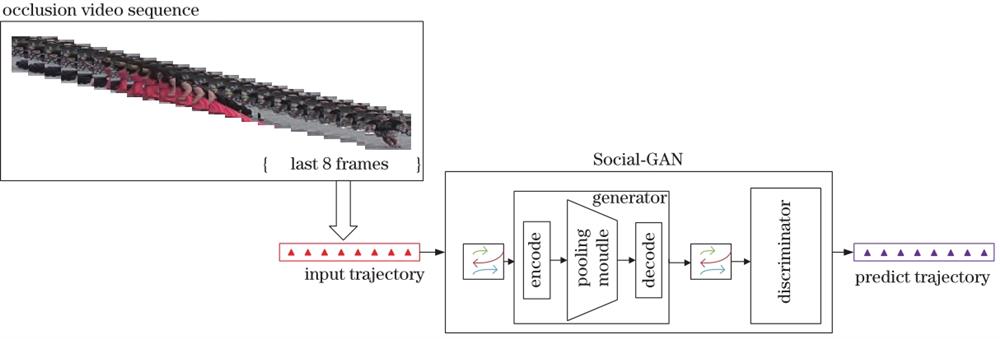

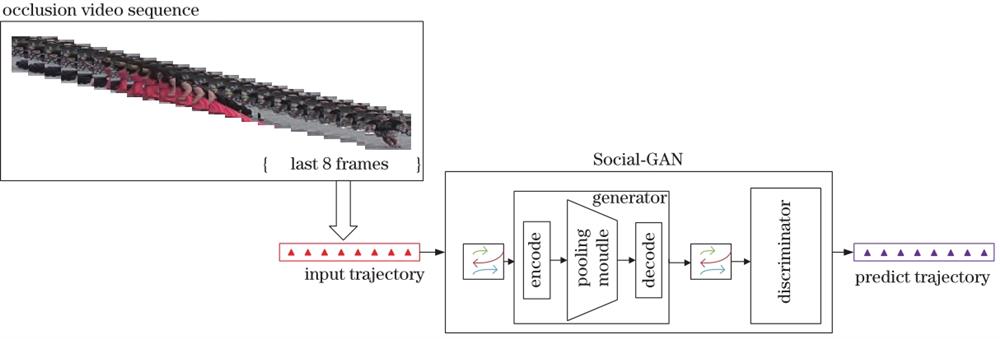

Input: MARS_traj dataset; trajectory prediction model Social-GAN; video-based person re-identification model

Output: mAP and Rank-k

1) The spatial coordinates and temporal information of each person ID in the query set of video sequences are input into the Social-GAN model;
2) the generator in Social-GAN generates possible predicted trajectories from these spatial coordinates and temporal information;
3) the discriminator in Social-GAN discriminates the generated predicted trajectories and yields the matching predicted-trajectory dataset query_pred;
4) For … do
5) For … do
6) the temporal fusion loss and the spatial fusion loss between the …-th video sequence in gallery and the …-th predicted trajectory in query_pred are computed by Eq. (3) and Eq. (4), respectively;
7) end
8) …
9) the value of … corresponding to … is obtained and assigned to …;
10) the …-th video sequence of gallery is sent into query_TP;
11) end
12) the fusion features of query_TP and gallery are extracted, respectively;
13) the feature distances between query_TP and gallery are calculated, and the feature vectors of all gallery video sequences are ranked by these distances;
14) the probability of a correct match within the ranked gallery is calculated for each query;
15) return mAP and Rank-k.
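Steps 4 to 15 can be sketched in Python as follows. This is a minimal sketch under stated assumptions and is not the authors' implementation. Eq. (3) and Eq. (4) are not reproduced in this section, so the sketch takes the temporal and spatial fusion losses as precomputed matrices indexed by (predicted trajectory, gallery sequence). It also assumes, hypothetically, that the missing step 8 sums the two losses and keeps the gallery index with the smallest total. The function names `select_gallery_indices` and `evaluate_reid`, the Euclidean distance used for step 13, and the standard AP/CMC definitions used for mAP and Rank-k are assumptions as well.

```python
import math
from typing import Dict, List, Sequence, Tuple


def select_gallery_indices(temporal_loss: Sequence[Sequence[float]],
                           spatial_loss: Sequence[Sequence[float]]) -> List[int]:
    """Steps 4-11 (hypothetical): for each predicted trajectory in query_pred,
    return the gallery index whose fused loss is smallest.

    ASSUMPTION: the fused loss is temporal + spatial; the source's step 8
    does not state how the two losses are combined.
    """
    selected = []
    for t_row, s_row in zip(temporal_loss, spatial_loss):
        fused = [t + s for t, s in zip(t_row, s_row)]
        # argmin over gallery sequences; ties resolve to the lowest index
        selected.append(min(range(len(fused)), key=fused.__getitem__))
    return selected


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """ASSUMPTION: Euclidean distance stands in for the unspecified metric."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def evaluate_reid(query_feats: Sequence[Sequence[float]],
                  query_ids: Sequence[int],
                  gallery_feats: Sequence[Sequence[float]],
                  gallery_ids: Sequence[int],
                  ks: Tuple[int, ...] = (1, 5)) -> Tuple[float, Dict[int, float]]:
    """Steps 13-15: rank the gallery per query, then compute mAP and Rank-k."""
    aps: List[float] = []
    cmc_hits = {k: 0 for k in ks}
    valid = 0
    for qf, qid in zip(query_feats, query_ids):
        dists = [euclidean(qf, gf) for gf in gallery_feats]
        order = sorted(range(len(gallery_feats)), key=dists.__getitem__)
        matches = [gallery_ids[j] == qid for j in order]
        n_rel = sum(matches)
        if n_rel == 0:
            continue  # query has no true match in the gallery; skip it
        valid += 1
        # Average precision: mean of precision@rank at every correct match
        hits, precision_sum = 0, 0.0
        for rank, is_match in enumerate(matches, start=1):
            if is_match:
                hits += 1
                precision_sum += hits / rank
        aps.append(precision_sum / n_rel)
        # CMC: the query counts toward Rank-k if its first match is within top k
        first = matches.index(True) + 1
        for k in ks:
            if first <= k:
                cmc_hits[k] += 1
    m_ap = sum(aps) / valid if valid else 0.0
    rank_k = {k: (cmc_hits[k] / valid if valid else 0.0) for k in ks}
    return m_ap, rank_k


if __name__ == "__main__":
    # Toy 2-D features and IDs, for illustration only (not MARS_traj data)
    q_feats, q_ids = [[0.0, 0.0], [10.0, 10.0]], [1, 2]
    g_feats = [[0.1, 0.0], [0.0, 0.2], [9.0, 9.0], [5.0, 5.0]]
    g_ids = [3, 1, 2, 2]
    m_ap, rank_k = evaluate_reid(q_feats, q_ids, g_feats, g_ids, ks=(1, 2))
    print(m_ap, rank_k)  # → 0.75 {1: 0.5, 2: 1.0}
```

In the toy run, the first query's true match sits at rank 2 (AP 0.5) and the second query's two true matches occupy ranks 1 and 2 (AP 1.0), giving mAP 0.75 and Rank-1 0.5. The sketch skips queries without any true gallery match and applies no camera-based filtering of gallery samples, either of which an actual MARS evaluation protocol may handle differently.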