Yan Guo, Hong-Chen Liu, Fu-Jiang Liu, Wei-Hua Lin, Quan-Sen Shao, Jun-Shun Su. Chinese named entity recognition with multi-network fusion of multi-scale lexical information[J]. Journal of Electronic Science and Technology, 2024, 22(4): 100287

Search by keywords or author

- Journal of Electronic Science and Technology

- Vol. 22, Issue 4, 100287 (2024)

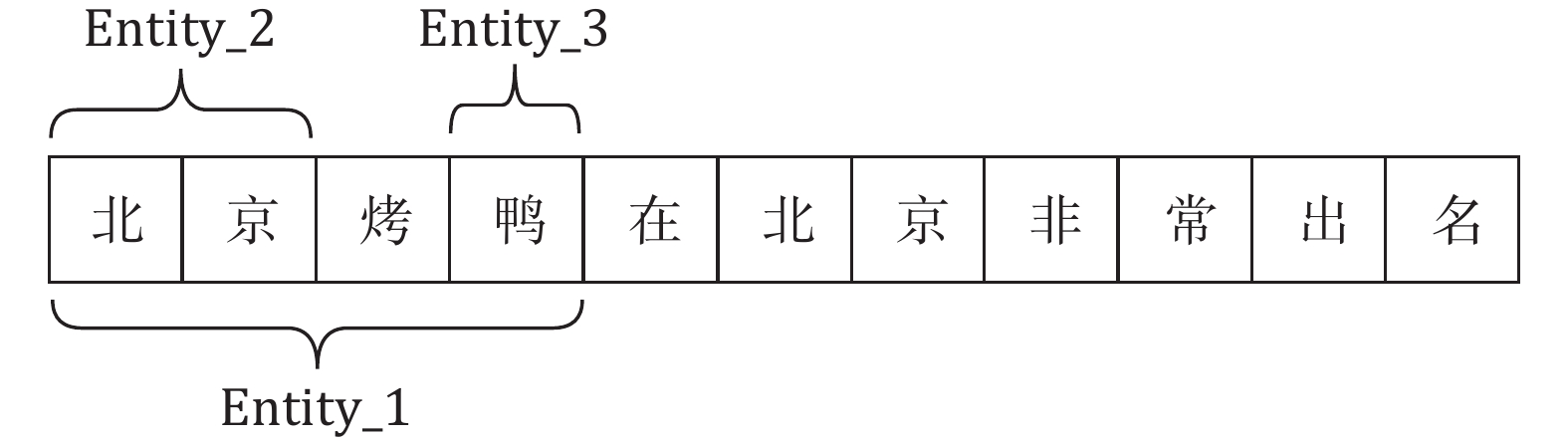

Fig. 1. An example of nested entities.

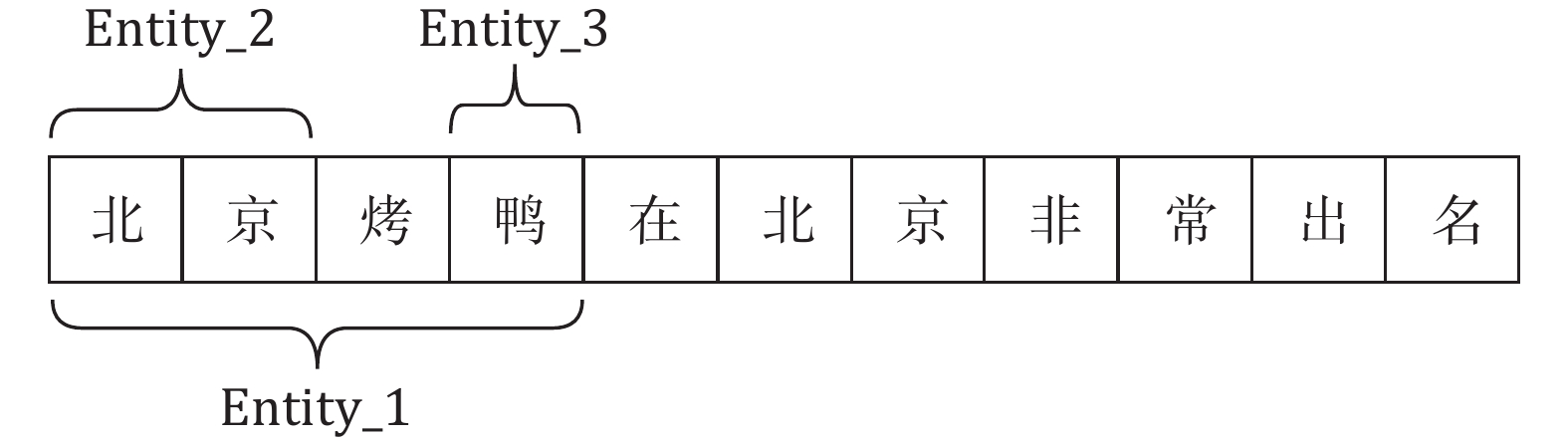

Fig. 2. An example of an ambiguous entity word.

Fig. 3. Comprehensive architecture of the BCWC model, consisting of four main layers: Embedding layer, feature extraction layer, feature fusion layer, and CRF layer. The embedding layer incorporates BERT and word embedding models for character and word embeddings, respectively. In the feature extraction layer, varied colored dashed boxes represent the range of word sequences captured by convolutional kernels of different scale sizes. The outcomes of these convolutions are seamlessly concatenated to form the final output. Subsequently, the feature fusion layer employs a multi-head attention mechanism for word weighting, culminating in the transmission of the output to the CRF layer for decoding. This layered structure ensures a comprehensive and efficient approach to information processing in the BCWC model.

Fig. 4. Multi-scale IDCNN process diagram. The input consists of word embedding vectors. The diagram showcases two different scale iterative dilated convolution blocks. When , a regular convolution kernel is used, and when , the dilated convolution is employed. Each convolution block comprises two stacked layers. The results are concatenated and activated using the ReLU function, followed by normalization with LayerNorm. The outputs from different scales are concatenated to obtain the final output.

Fig. 5. [in Chinese]

Fig. 6. Correctly identified named entities of the sample.

Fig. 7. Tokenization and multi-scale convolution kernel capturing process.

Fig. 8. Entity recognition under the same information capture window size.

Fig. 9. Results of different models on the three datasets.

Fig. 10. Quantitative analysis of different structures: Results of IDCNN (a) with different structures on various datasets and (b) with the same structure but different convolutional kernel sizes on various datasets. In the notation m×n , m represents the number of iterative layers and n represents the size of the DCNN blocks.

Fig. 11. Comparison of different structures on three datasets.

|

Table 1. Some common hyperparameter settings about the experiments.

|

Table 1. Statistics of datasets.

|

Table 2. Some hyperparameter settings about the experiments on the three datasets.

|

Table 2. Performance on CLUENER.

|

Table 3. Some hardware and software environments about the experiments.

|

Table 3. Performance on Weibo.

|

Table 4. Performance on Youku.

| |||||||||||||||||||||||||||||||||||||||||||

Table 5. F 1-score results of the ablation study.

Set citation alerts for the article

Please enter your email address