As remote sensing technology advances and high-resolution imagery becomes widely available, change detection is increasingly applied in environmental monitoring, urban planning, and disaster assessment. For high-resolution imagery in particular, accurate detection of surface changes is crucial for revealing environmental trends and supporting decision-making. Timely and accurate identification of surface changes supports post-disaster reconstruction, ecological restoration, and resource management, and provides essential information for governments and related departments. However, existing change detection methods still face challenges: insufficient local feature extraction, difficulty in modeling global dependencies, and excessive computational complexity when handling large-scale, high-resolution images. They are also sensitive to illumination and seasonal variations, which leads to pseudo-changes, false detections, and missed detections. To overcome these challenges, we propose a remote sensing image change detection network based on change guidance and bidirectional Mamba (CGBMamba). By combining the long-range dependency modeling capability of the bidirectional Mamba module with the prior knowledge carried by deep change-guided features, CGBMamba fuses local details with global features to improve change detection accuracy. We also design a time domain fusion module that fuses bitemporal features through a cross-temporal gating mechanism, further enhancing the model's ability to capture temporal changes. In addition, CGBMamba introduces smoothed residuals to reduce the effect of noise and detail errors on change detection, which improves the model's robustness in complex scenes. The method thus addresses the limitations of existing change detection techniques in local information extraction and global dependency modeling for high-resolution remote sensing images, and it strengthens the model's resistance to external disturbances such as illumination and seasonal variations.
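To make the idea of a cross-temporal gating mechanism concrete, the following is a minimal NumPy sketch, not the module used in CGBMamba. It assumes the gate is a sigmoid over a 1x1 convolution of the concatenated bitemporal features and that the fused output is a convex blend of the two inputs; the function name `gated_temporal_fusion` and the parameters `w_gate` and `b_gate` are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gated_temporal_fusion(f1, f2, w_gate, b_gate):
    """Blend two (C, H, W) feature maps with a learned per-pixel gate.

    Assumed form (illustrative only):
        gate  = sigmoid(conv1x1([f1; f2]))
        fused = gate * f1 + (1 - gate) * f2
    w_gate has shape (C, 2C) and b_gate has shape (C,); both are
    hypothetical parameters standing in for a 1x1 convolution.
    """
    stacked = np.concatenate([f1, f2], axis=0)            # (2C, H, W)
    # A 1x1 convolution is a matrix product over the channel axis.
    logits = np.einsum("oc,chw->ohw", w_gate, stacked)
    logits = logits + b_gate[:, None, None]
    gate = sigmoid(logits)                                # values in (0, 1)
    return gate * f1 + (1.0 - gate) * f2                  # (C, H, W)

# Usage with random features standing in for encoder outputs.
rng = np.random.default_rng(0)
feat_t1 = rng.normal(size=(4, 8, 8))
feat_t2 = rng.normal(size=(4, 8, 8))
w = rng.normal(scale=0.1, size=(4, 8))
b = np.zeros(4)
fused = gated_temporal_fusion(feat_t1, feat_t2, w, b)
```

Because the gate lies strictly between 0 and 1, each fused value stays between the two input values at that position, so the gate decides how much each acquisition date contributes per pixel and channel.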

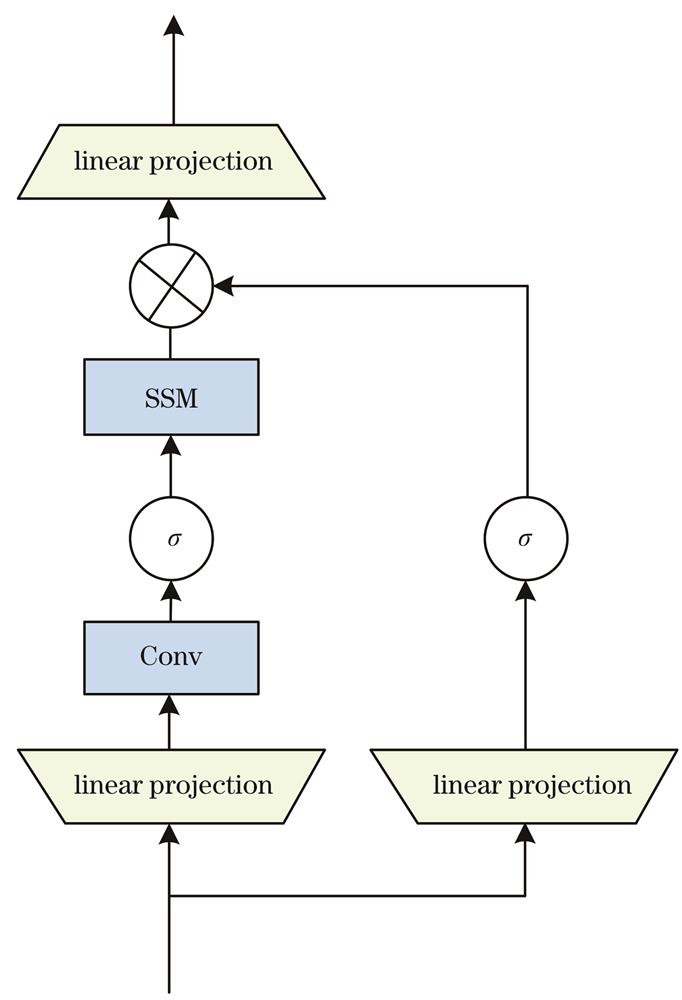

The CGBMamba network uses a two-branch encoder-decoder architecture with two main components: the time domain fusion module (TAM) and the bidirectional Mamba module, as illustrated in Fig. 2. First, a two-branch shared-weight encoder processes the bitemporal images, with each branch employing ResNet18 for feature extraction. Next, the time domain fusion module merges features from different time phases and scales to capture change information. This module exploits the complementary information in the bitemporal images to generate a fused feature map rich in change-related features. At the same time, the deepest features of the fused map are passed to a classifier for prediction. The resulting deep change feature map is then sent, together with the fused feature map, to the bidirectional Mamba module. As the core module, the bidirectional Mamba module refines the time domain information and integrates local features with global dependencies to improve change detection accuracy. It strengthens the interrelationships between time domains through bidirectional transfer and extracts deep change features through multilevel information fusion.
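The bidirectional transfer described above can be illustrated with a deliberately simplified sketch. Mamba's selective state-space layer uses input-dependent parameters; the code below replaces it with a fixed-decay linear recurrence, which is an assumption made purely for illustration. The names `linear_scan`, `bidirectional_scan`, and `scan_feature_map`, as well as the row-major flattening order and the sum used to merge the two directions, are hypothetical choices.

```python
import numpy as np

def linear_scan(x, decay):
    """Causal recurrence h[t] = decay * h[t-1] + (1 - decay) * x[t].

    x has shape (L, C). This fixed-decay recurrence is a stand-in for a
    selective state-space layer, not an implementation of Mamba.
    """
    out = np.zeros(x.shape, dtype=float)
    state = np.zeros(x.shape[1:], dtype=float)
    for t in range(x.shape[0]):
        state = decay * state + (1.0 - decay) * x[t]
        out[t] = state
    return out

def bidirectional_scan(x, decay=0.9):
    """Run the scan forward and backward, then sum the two passes."""
    forward = linear_scan(x, decay)
    backward = linear_scan(x[::-1], decay)[::-1]
    return forward + backward

def scan_feature_map(feat, decay=0.9):
    """Flatten a (C, H, W) map to H*W tokens, scan, and restore the shape."""
    c, h, w = feat.shape
    tokens = feat.reshape(c, h * w).T                     # (H*W, C)
    mixed = bidirectional_scan(tokens, decay)
    return mixed.T.reshape(c, h, w)

# An impulse in the middle of a sequence spreads in both directions.
impulse = np.zeros((5, 1))
impulse[2, 0] = 1.0
response = bidirectional_scan(impulse, decay=0.5)[:, 0]
```

A forward-only scan would leave the positions before the impulse at zero. Adding the backward pass gives every position access to context on both sides, which is the property the bidirectional design relies on.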

To validate the effectiveness of our method, we conduct experiments on three remote sensing change detection datasets: 1) LEVIR-CD dataset. Captured by the Gaofen-2 satellite, this dataset contains change samples from several urban areas with a resolution of 0.5 m. Our method achieves an F1 score of 90.42% and an intersection over union (IoU) of 82.51%, significantly outperforming the comparison methods, particularly on small and detailed targets. The visualization results show that our method identifies complex changing regions effectively and remains robust in the presence of shadows and color variations. 2) CLCD dataset. This dataset includes building change samples under varying environmental conditions, with a resolution of 0.8 m. Our method achieves an F1 score of 75.15% and an IoU of 60.20%, providing higher accuracy and robustness than the other methods, particularly for small targets and complex backgrounds. The visualization results further highlight our method's ability to accurately identify changing targets in color-similar regions. 3) SYSU-CD dataset. This dataset features irregularly shaped and complexly distributed changing targets, with a resolution of 1 m. Our method achieves an F1 score of 81.34% and an IoU of 67.35%, demonstrating strong performance in detecting irregularly shaped targets and recognizing edges. Compared with the other methods, our model handles data imbalance effectively and maintains its detection performance across targets of varying shapes and sizes. The visualization results from the three datasets indicate that introducing TAM at the encoder stage significantly improves the model's ability to recognize changing regions by enhancing the fusion of features across time phases. This is especially beneficial for small changing regions and complex backgrounds, where it enables the model to capture more detailed change information. In the decoder stage, the bidirectional Mamba module (BMamba) helps the model recognize change targets of different scales and shapes in complex scenes by processing both long- and short-range dependencies, thereby strengthening feature correlations. Benchmarked against eight classical change detection methods, our method outperforms them. Additionally, ablation studies across the three datasets further validate the contribution of each module to overall performance.
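For reference, the F1 score and IoU reported above are both functions of the true positive, false positive, and false negative pixel counts of the change class. The sketch below shows one common way to compute them from binary masks; the function name `change_metrics` and the toy masks are hypothetical, and the evaluation code behind the reported numbers may aggregate counts differently.

```python
import numpy as np

def change_metrics(pred, target):
    """Precision, recall, F1, and IoU of the change class for binary masks."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    tp = int(np.sum(pred & target))      # changed pixels correctly detected
    fp = int(np.sum(pred & ~target))     # false detections
    fn = int(np.sum(~pred & target))     # missed detections
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = tp + fp + fn
    f1 = 2 * tp / (2 * tp + fp + fn) if denom else 0.0
    iou = tp / denom if denom else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou}

# Toy masks: 2 true positives, 1 false positive, 1 false negative.
pred_mask = [[1, 1, 0],
             [0, 1, 0]]
true_mask = [[1, 0, 0],
             [0, 1, 1]]
scores = change_metrics(pred_mask, true_mask)
```

When both metrics are computed from the same pooled counts, they are linked by IoU = F1 / (2 - F1), so IoU is always the lower of the two for an imperfect prediction.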

We propose a change detection method for remote sensing images based on CGBMamba, which improves the detection of complex change targets by combining deep change guidance features with bidirectional Mamba modules. The change guidance uses prior information to guide the fusion of multi-scale features, ensuring that critical change information is preserved. Meanwhile, the bidirectional Mamba module improves the fusion of local details and global features by capturing long-range dependencies, which raises the accuracy and efficiency of change detection on large-scale, high-resolution remote sensing images. In addition, the time domain fusion module improves the handling of bitemporal changes by capturing subtle change features through cross-temporal and cross-scale mechanisms, while minimizing the impact of noise and detail errors on the detection results.
