Synthetic aperture radar (SAR) is crucial for ship detection in both military and civilian applications because it offers imaging in all weather conditions, day or night. However, SAR images present unique challenges: ships appear at widely varying scales, backgrounds are complex, and speckle noise is pervasive. These factors often lead to significant loss of semantic information, especially for small targets, which hinders the performance of conventional ship detectors. The limited computing resources on remote sensing platforms further exacerbate this issue, so there is a strong need for algorithms that are both accurate and computationally efficient. We therefore aim to address the limitations of current ship detection algorithms by designing a novel, lightweight, and robust architecture that emphasizes the accurate detection of small targets in complex SAR imagery. Our approach balances precision against computational efficiency, specifically for better detection of multi-scale objects, while maintaining low model complexity and remaining feasible for deployment on resource-constrained platforms.

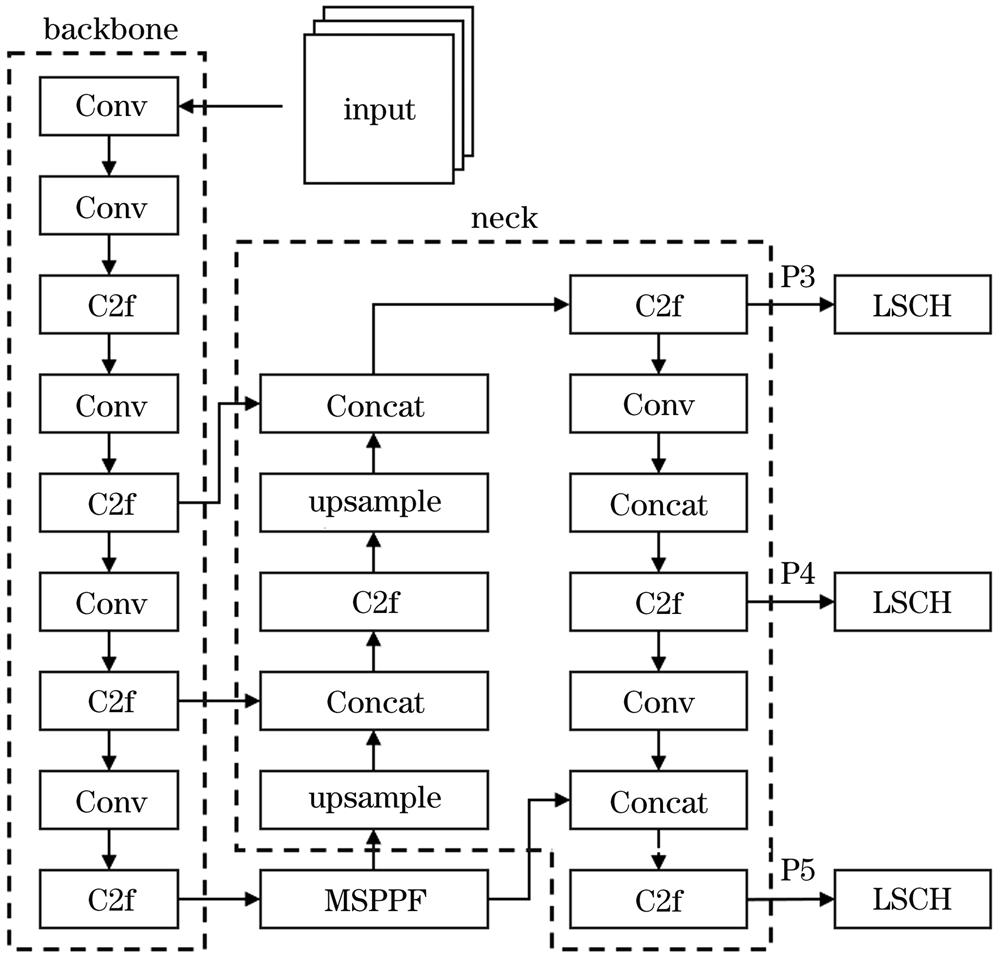

To tackle these complexities, we propose the multi-scale spatial pyramid pooling fast (MSPPF) module, which is designed to address the loss of multi-scale semantic information caused by successive pooling operations. MSPPF performs pooling at multiple scales simultaneously on the input feature maps, enabling the network to preserve fine-grained spatial information while capturing contextual information across scales. To further enhance feature extraction, we incorporate the efficient multi-scale attention (EMA) mechanism, which captures channel-specific semantic information across scales and uses it for adaptive feature aggregation. By reweighting features and exploiting local contextual awareness, EMA improves the robustness of feature extraction for ships at different scales, including small vessels that are often difficult to identify with high precision in SAR images. We also propose a lightweight shared convolution head (LSCH) to reduce parameters and computational overhead. LSCH shares convolutional operations extensively and uses group normalization to improve localization and classification, so it maintains detection performance with far fewer parameters and simpler computations. The result is a significantly lighter, faster, and more resource-efficient architecture. Finally, a key component of our approach is an improved bounding box loss function that combines ShapeIoU and Focaler-IoU. ShapeIoU accounts for the shape and overlap of the boxes, while Focaler-IoU focuses the regression on poorly fitted bounding boxes and hard examples, particularly smaller objects that have higher prediction errors. The combined loss is therefore sensitive to differences in object size and aspect ratio between predictions and ground truth, and it places additional emphasis on smaller targets. By addressing both the geometric accuracy of the bounding boxes and the challenging examples, this combination significantly enhances our model's performance, especially for small targets that are typically difficult to predict.
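The Focaler-IoU part of the loss can be illustrated with a minimal sketch. It assumes the piecewise-linear remapping from the published Focaler-IoU formulation, where IoU values in an interval [d, u] are rescaled to [0, 1], and the usual way of attaching it to another IoU-based loss (base loss + IoU − remapped IoU). The function names, the interval values, and the use of plain `1 - IoU` as a stand-in for the ShapeIoU term are assumptions made for illustration only; the ShapeIoU term itself is not reproduced here, and this is not the authors' implementation.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def focaler_iou(iou, d=0.0, u=0.95):
    """Linearly remap IoU from [d, u] to [0, 1], clamping outside.

    d and u are hypothetical defaults; the source does not state them.
    """
    if iou < d:
        return 0.0
    if iou > u:
        return 1.0
    return (iou - d) / (u - d)


def focaler_adjusted_loss(base_loss, iou, d=0.0, u=0.95):
    """Attach the Focaler remapping to an IoU-based loss.

    base_loss stands in for the ShapeIoU loss (assumption: any loss of
    the form 1 - IoU + penalty terms can be plugged in here).
    """
    return base_loss + iou - focaler_iou(iou, d, u)


# Usage: plain (1 - IoU) is used as a placeholder base loss.
pred, gt = (0.0, 0.0, 2.0, 2.0), (1.0, 1.0, 3.0, 3.0)
iou = box_iou(pred, gt)
loss = focaler_adjusted_loss(1.0 - iou, iou, d=0.0, u=0.95)
```

Choosing the interval [d, u] controls which samples drive the gradient: samples whose IoU falls outside the interval are clamped, so the regression concentrates on the range the designer considers informative.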

We validate the proposed method on the HRSID and SSDD datasets, which are widely recognized benchmarks for SAR-based ship detection. The experimental results demonstrate that our algorithm significantly outperforms the baseline YOLOv8 model, improving key metrics while requiring substantially fewer computational resources. Specifically, on the HRSID dataset, our algorithm achieves a 1.8% improvement in average precision (AP) at the 0.5:0.95 intersection over union (IoU) threshold, alongside a 23.3% reduction in parameters and a 23.2% decrease in computational costs (Table 1). On the SSDD dataset, the results are similar, with a 3.1% AP improvement at the same threshold and, again, a 23.3% reduction in parameters and a 23.2% reduction in computational costs. The improved detection, especially for small vessels with unusual aspect ratios, is primarily due to our approach to small target detection in remote sensing, particularly the Focaler-ShapeIoU loss. The results are also attributed to the synergy between MSPPF and EMA, which facilitates better capture of multi-scale information and more effective handling of challenging examples (Fig. 5). The LSCH design balances detection quality against model complexity. The experimental results show a clear improvement in detecting small and difficult targets, together with reduced parameters and computations, without sacrificing accuracy for multi-scale vessels (Table 2). The novel bounding box loss function, combining ShapeIoU and Focaler-IoU, enables the accurate prediction of small objects with high aspect ratios. This highlights its effectiveness in improving small object detection, which matters more than general object detection in this setting, and thus better addresses real-world needs in SAR-based ship detection scenarios.
Furthermore, generalization experiments are conducted with YOLOv8n and the algorithm presented in this paper across various datasets. The results show that our algorithm outperforms traditional methods across different datasets and resolutions, thereby validating its generalizability (Table 4).
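For readers unfamiliar with the AP metric at the 0.5:0.95 IoU threshold, the following sketch shows how it is commonly computed. It assumes the COCO-style convention of ten thresholds from 0.5 to 0.95 in steps of 0.05, which the text does not spell out; the function names are hypothetical and no values from the paper's tables are used.

```python
def coco_iou_thresholds():
    """Ten IoU thresholds: 0.50, 0.55, ..., 0.95 (assumed COCO convention)."""
    return [round(0.5 + 0.05 * i, 2) for i in range(10)]


def thresholds_matched(iou):
    """Thresholds at which a detection with this IoU counts as a match."""
    return [t for t in coco_iou_thresholds() if iou >= t]


def ap_50_95(ap_per_threshold):
    """Average the per-threshold AP values into a single AP@0.5:0.95 score."""
    thresholds = coco_iou_thresholds()
    if len(ap_per_threshold) != len(thresholds):
        raise ValueError("expected one AP value per IoU threshold")
    return sum(ap_per_threshold) / len(thresholds)


# Usage: a detection overlapping its ground truth with IoU 0.72 is a
# true positive at the five loosest thresholds only.
matched = thresholds_matched(0.72)
```

Because the stricter thresholds only count tightly localized boxes, gains on this metric reflect better box regression as well as better classification, which is consistent with the emphasis the text places on the bounding box loss.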

We introduce a novel, lightweight, multi-scale SAR ship detection algorithm that addresses the challenges of multi-scale ship variations, complex backgrounds, and limited computing resources in remote sensing. By integrating MSPPF with EMA for effective multi-scale feature extraction, using the lightweight LSCH design to minimize computational cost, and incorporating a custom-designed bounding box loss function that improves small target predictions, our model balances high detection accuracy against significantly reduced model complexity. The proposed technique can be applied effectively to diverse ship scales, as evidenced by improved overall accuracy and enhanced detection of small targets. It offers increased detection precision while simultaneously reducing parameters, computational complexity, and resource requirements. This is particularly evident in the improved detection of smaller, more difficult-to-identify targets through the combination of our new modules and bounding box loss function. The methodology provides a resource-efficient solution that enables more widespread deployment and contributes to practical applications of SAR-based ship detection. It allows faster and more accurate analysis with limited computational capabilities and serves as a stepping stone toward further deployment on remote platforms.
