Yan Li, Tai-Kang Tian, Meng-Yu Zhuang, Yu-Ting Sun. De-biased knowledge distillation framework based on knowledge infusion and label de-biasing techniques[J]. Journal of Electronic Science and Technology, 2024, 22(3): 100278

- Journal of Electronic Science and Technology

- Vol. 22, Issue 3, 100278 (2024)

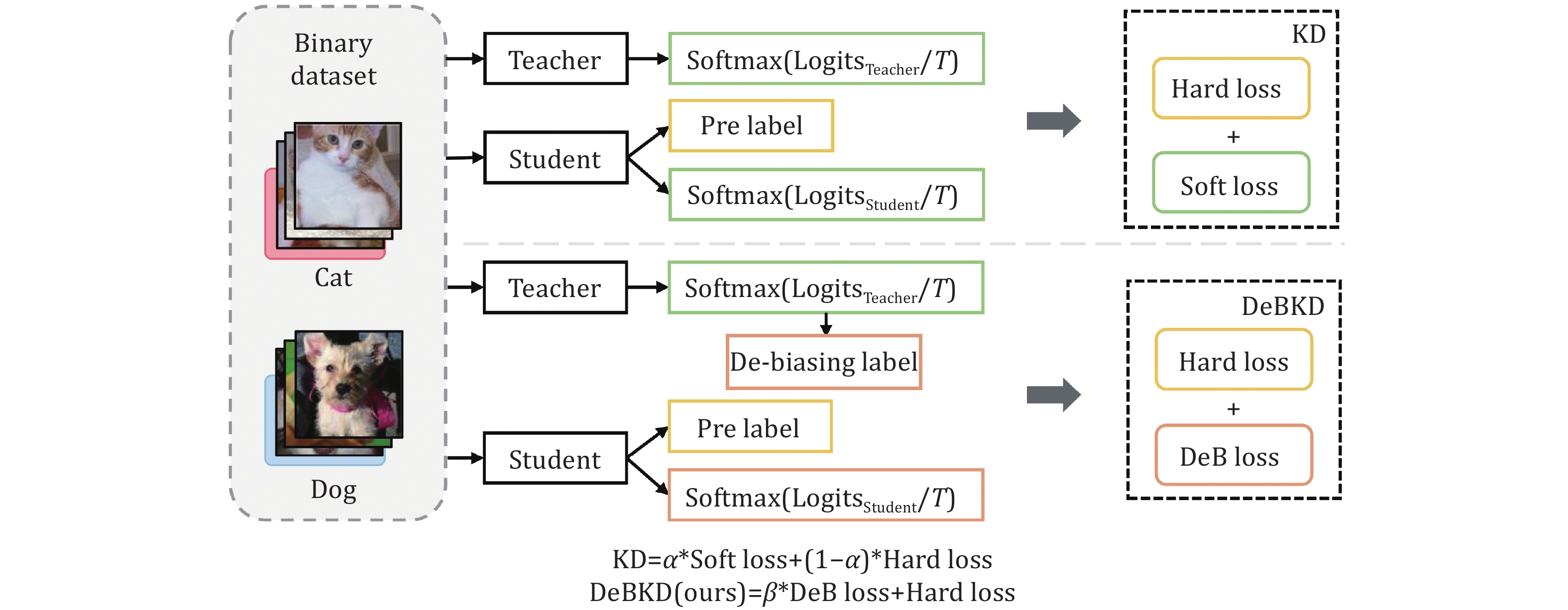

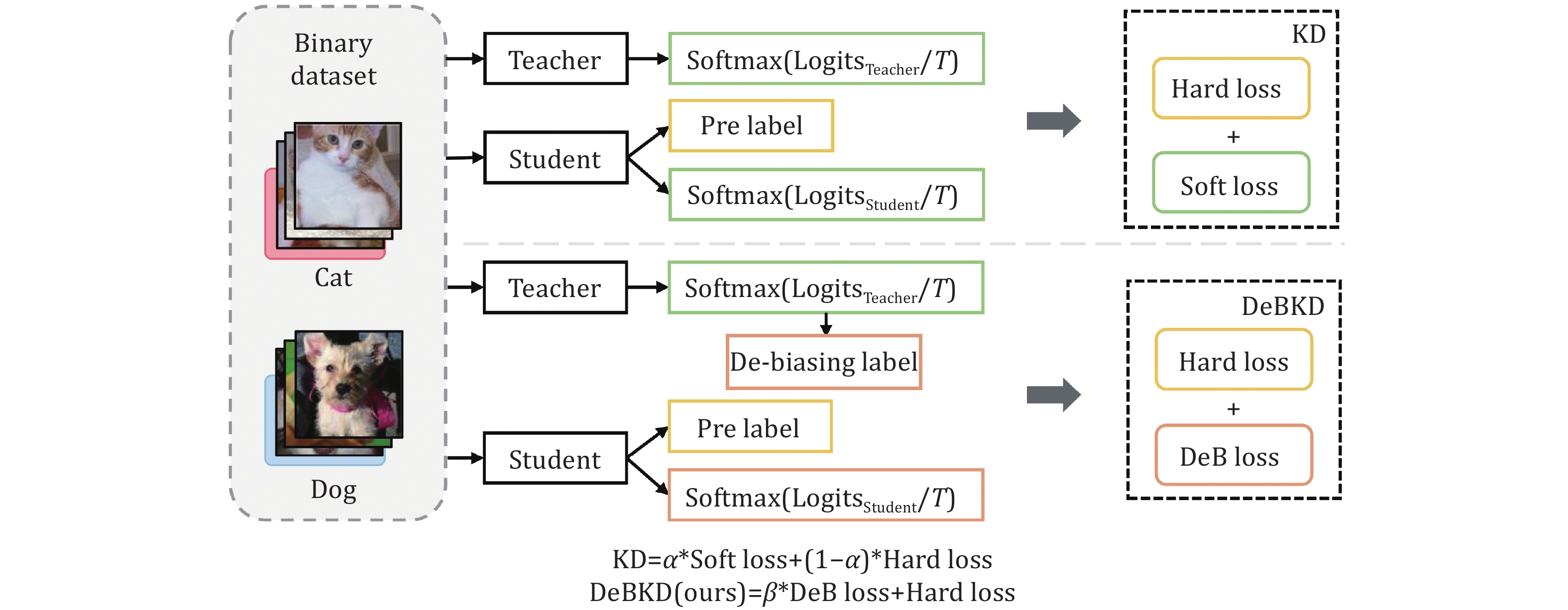

Fig. 1. Illustration of the classical knowledge distillation (KD) and our de-biased knowledge distillation (DeBKD).

Fig. 2. Workflow of the proposed de-biased knowledge distillation framework.

Fig. 3. Process of knowledge infusion.

Table 1. Comparison of distillation effects across different datasets.

Table 2. Impact of different learning freedom on the classification accuracy.

Table 3. Impact of knowledge infusion (KI) on model performance (Δ indicates the change in performance).
