[1] LeCun Y., Bengio Y., Hinton G.. Deep learning. Nature, 521, 436-444(2015).

[2] P.F. Zhang, J.S. Duan, Z. Huang, H.Z. Yin, Joint-teaching: Learning to refine knowledge for resource-constrained unsupervised cross-modal retrieval, in: Proc. of the 29th ACM Intl. Conf. on Multimedia, Chengdu, China, 2021, pp. 1517–1525.

[3] Ye G.-H., Yin H.-Z., Chen T., Xu M., Nguyen Q.V.H., Song J.. Personalized on-device e-health analytics with decentralized block coordinate descent. IEEE J. Biomed. Health, 27, 5249-5259(2022).

[4] Y.T. Sun, G.S. Pang, G.H. Ye, T. Chen, X. Hu, H.Z. Yin, Unraveling the ‘anomaly’ in time series anomaly detection: A self-supervised tri-domain solution, in: Proc. of 2024 IEEE 40th Intl. Conf. on Data Engineering, Utrecht, Netherlands, 2024, pp. 981–994.

[5] P.F. Zhang, Z. Huang, X.S. Xu, G.D. Bai, Effective and robust adversarial training against data and label corruptions, IEEE T. Multimedia (May 2024), DOI: 10.1109/TMM.2024.3394677.

[7] C. Buciluǎ, R. Caruana, A. Niculescu-Mizil, Model compression, in: Proc. of the 12th ACM SIGKDD Intl. Conf. on Knowledge Discovery and Data Mining, Philadelphia, USA, 2006, pp. 535–541.

[8] Li Z., Li H.-Y., Meng L.. Model compression for deep neural networks: A survey. Computers, 12, 60:1-22(2023).

[10] H. Pham, M. Guan, B. Zoph, Q. Le, J. Dean, Efficient neural architecture search via parameter sharing, in: Proc. of the 35th Intl. Conf. on Machine Learning, Stockholm, Sweden, 2018, pp. 4095–4104.

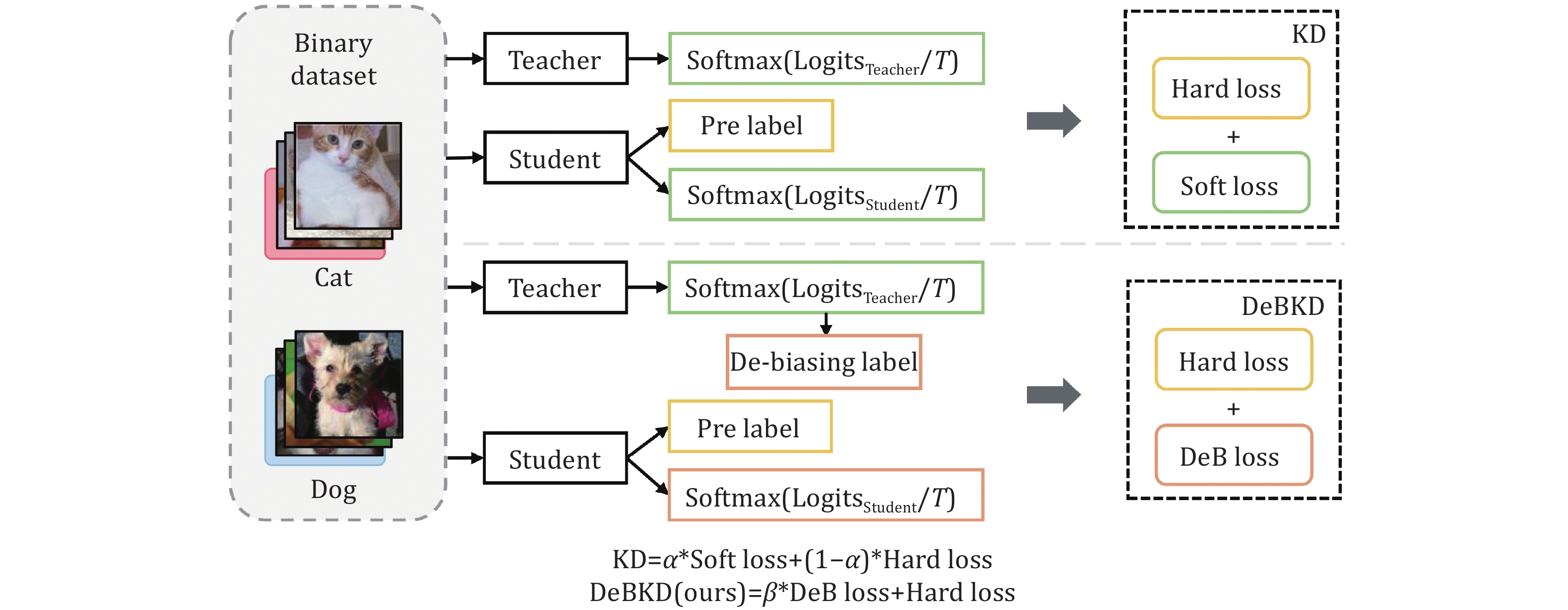

[11] G. Hinton, O. Vinyals, J. Dean, Distilling the knowledge in a neural network [Online]. Available, https://arxiv.org/abs/1503.02531, March 2015.

[13] M. Phuong, C. Lampert, Towards understanding knowledge distillation, in: Proc. of the 36th Intl. Conf. on Machine Learning, Long Beach, USA, 2019, pp. 5142–5151.

[14] X. Cheng, Z.F. Rao, Y.L. Chen, Q.S. Zhang, Explaining knowledge distillation by quantifying the knowledge, in: Proc. of the IEEE/CVF Conf. on Computer Vision and Pattern Recognition, Seattle, USA, 2020, pp. 12922–12932.

[15] A. Gotmare, N.S. Keskar, C.M. Xiong, R. Socher, A closer look at deep learning heuristics: Learning rate restarts, warmup and distillation [Online]. Available, https://arxiv.org/abs/1810.13243, October 2018.

[16] A. Romero, N. Ballas, S.E. Kahou, A. Chassang, C. Gatta, Y. Bengio, FitNets: Hints for thin deep nets, in: Proc. of the 3rd Intl. Conf. on Learning Representations, San Diego, USA, 2015, pp. 1–13.

[17] K.M. He, X.Y. Zhang, S.Q. Ren, J. Sun, Deep residual learning for image recognition, in: Proc. of the IEEE Conf. on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016, pp. 770–778.

[19] P.F. Zhang, Z. Huang, G.D. Bai, X.S. Xu, IDEAL: High-order-ensemble adaptation network for learning with noisy labels, in: Proc. of the 30th ACM Intl. Conf. on Multimedia, Lisboa, Portugal, 2022, pp. 325–333.

[20] Z.X. Xu, P.H. Wei, W.M. Zhang, S.G. Liu, L. Wang, B. Zheng, UKD: Debiasing conversion rate estimation via uncertainty-regularized knowledge distillation, in: Proc. of the ACM Web Conf., Lyon, France, 2022, pp. 2078–2087.

[21] Z.N. Li, Q.T. Wu, F. Nie, J.C. Yan, GraphDE: A generative framework for debiased learning and out-of-distribution detection on graphs, in: Proc. of the 36th Intl. Conf. on Neural Information Processing Systems, New Orleans, USA, 2024, pp. 2195:1–14.

[22] Y. Cao, Z.Y. Fang, Y. Wu, D.X. Zhou, Q.Q. Gu, Towards understanding the spectral bias of deep learning, in: Proc. of the 30th Intl. Joint Conf. on Artificial Intelligence, Montreal, Canada, 2021, pp. 2205–2211.

[23] Y. Guo, Y. Yang, A. Abbasi, AutoDebias: Debiasing masked language models with automated biased prompts, in: Proc. of the 60th Annu. Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Dublin, Ireland, 2022, pp. 1012–1023.

[24] T. Schnabel, A. Swaminathan, A. Singh, N. Chandak, T. Joachims, Recommendations as treatments: Debiasing learning and evaluation, in: Proc. of the 33rd Intl. Conf. on Machine Learning, New York, USA, 2016, pp. 1670–1679.

[25] J.W. Chen, H.D. Dong, Y. Qiu, et al., AutoDebias: Learning to debias for recommendation, in: Proc. of the 44th Intl. ACM SIGIR Conf. on Research and Development in Information Retrieval, Virtual Event, 2021, pp. 21–30.

[26] K. Zhou, B.C. Zhang, X. Zhao, J.R. Wen, Debiased contrastive learning of unsupervised sentence representations, in: Proc. of the 60th Annu. Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Dublin, Ireland, 2022, pp. 6120–6130.

[27] B. Heo, J. Kim, S. Yun, H. Park, N. Kwak, J.Y. Choi, A comprehensive overhaul of feature distillation, in: Proc. of the IEEE/CVF Intl. Conf. on Computer Vision, Seoul, Korea (South), 2019, pp. 1921–1930.

[28] W. Park, D. Kim, Y. Lu, M. Cho, Relational knowledge distillation, in: Proc. of the IEEE/CVF Conf. on Computer Vision and Pattern Recognition, Long Beach, USA, 2019, pp. 3962–3971.

[29] B.R. Zhao, Q. Cui, R.J. Song, Y.Y. Qiu, J.J. Liang, Decoupled knowledge distillation, in: Proc. of the IEEE/CVF Conf. on Computer Vision and Pattern Recognition, New Orleans, USA, 2022, pp. 11943–11952.

[30] H.L. Zhou, L.C. Song, J.J. Chen, et al., Rethinking soft labels for knowledge distillation: A bias-variance tradeoff perspective, in: Proc. of the 9th Intl. Conf. on Learning Representations, Virtual Event, 2021, pp. 1–15.