[1] Li D R, Li M. Research advance and application prospect of unmanned aerial vehicle remote sensing system[J]. Geomatics and Information Science of Wuhan University, 39, 505-513, 540(2014).

[4] Zhang Y J. Geometric processing of low altitude remote sensing images captured by unmanned airship[J]. Geomatics and Information Science of Wuhan University, 34, 284-288(2009).

[13] Gao X, Zhang T, Liu Y et al. Fourteen lectures on visual SLAM: from theory to practice[M](2017).

[22] Eade E. Lie groups for 2D and 3D transformations[EB/OL]. https://ethaneade.org/lie.pdf

[23] Rusu R B, Cousins S. 3D is here: point cloud library (PCL)[C], 9-13(2011).

[30] Rusinkiewicz S, Levoy M. Efficient variants of the ICP algorithm[C], 145-152(2001).

[36] Segal A V, Haehnel D, Thrun S. Generalized-ICP[M]. Robotics, 161-168(2010).

[37] Serafin J, Grisetti G. NICP: dense normal based point cloud registration[C], 742-749(2015).

[41] Anderson S, Barfoot T D. RANSAC for motion-distorted 3D visual sensors[C], 2093-2099(2013).

[43] Anderson S, Barfoot T D. Towards relative continuous-time SLAM[C], 1033-1040(2013).

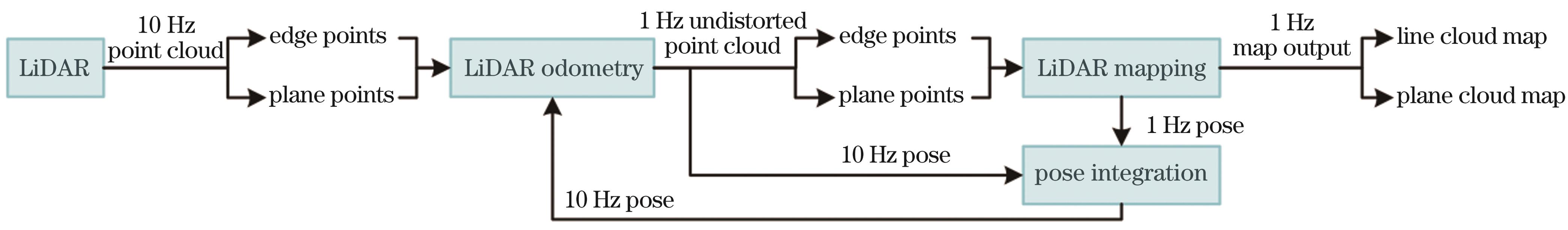

[44] Zhang J, Singh S. LOAM: lidar odometry and mapping in real-time[C], 12-16(2014).

[78] Barfoot T D. State estimation for robotics[M](2017).

[84] Geneva P, Eckenhoff K, Yang Y L et al. LIPS: LiDAR-inertial 3D plane SLAM[C], 123-130(2018).

[93] Wang J. Geometric structure of high-dimensional data and dimensionality reduction[M](2011).

[102] Thrun S, Burgard W, Fox D. Probabilistic robotics[M](2005).

[109] Yang Y F, Zhang A, Guo Y C et al. Influence of target surface BRDF on non-line-of-sight imaging[J]. Laser & Optoelectronics Progress, 61, 1811003(2024).